On May 2, 2017, our class conducted a field exercise at the community garden just south of South Middle School in Eau Claire, Wisconsin. The exercise included multiple missions and platforms.

[Photo: Platform cases and GCP equipment]

Process

Before flying any missions, we conducted pre-flight checks and mission planning. We checked all of the items covered in the previous report on mission planning, including batteries, equipment, and weather. Once in the field, we had to use a MyFi mobile WiFi hotspot to update the DJI firmware before starting the mission, because DJI doesn't allow its drones to fly without the latest version.

Upon arrival, we set up ground control points (GCPs) around the garden, recorded their GPS coordinates, and entered that data into the mission software. Nine points were placed around the garden in a roughly counterclockwise pattern.

[Photo: GCP data acquisition]

[Photo: Close-up of the level used when recording GCP/GPS data]

[Photo: Garden with GCPs]

Two missions were flown with a DJI Phantom 3 at an altitude of 70 meters. The first mission surveyed the community garden. The second mission captured oblique imagery of the area around our vehicles. The DJI Inspire Pro flight was simply a demonstration of the platform, with no imagery taken.

[Photo: Inspire platform]

Winds that day at times exceeded 15 mph. Takeoffs were done manually, and the platforms were then maneuvered by hand to ensure serviceability before the planned missions were initiated. Once the maneuvering was satisfactory, we started the planned missions, and the platform and software took over, completing each mission, including the landing, automatically. Even though the missions were autonomous, it was still important to have personnel watching the platform in the air as well as personnel monitoring the display on the controller. Monitoring both allows for a quick reaction to anything unexpected.

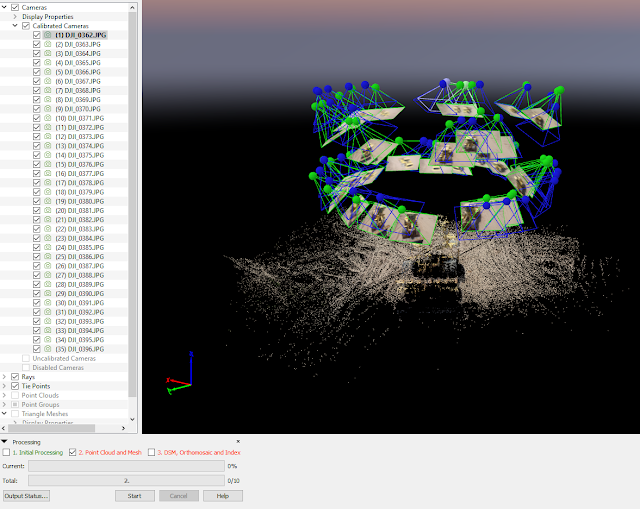

Once the imagery was collected, Dr. Hupy processed the data from both missions in Pix4D.

Maps

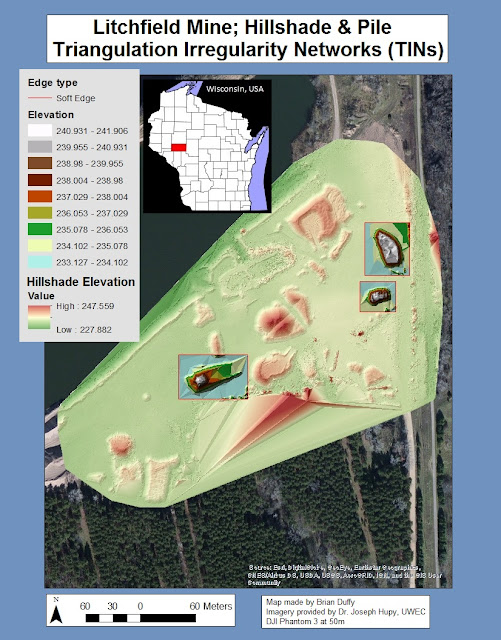

This map shows the elevation of the study site. Apart from the trees that border the garden, the entire site is fairly flat.

This orthomosaic shows the entire study site as a single, stitched-together aerial image.

Conclusion

Field experience is the best way to learn the process (pre-flight checks, GCPs, mission planning, in-field adjustments, etc.). The most important things are to work through your checklist and to be prepared for just about anything.

Most missions will not go exactly as planned, so UAS personnel need to be flexible and able to adapt. In this case, we couldn't start the mission until the DJI Phantom 3 firmware was updated, which took roughly 20 to 30 minutes to download and install. Without the WiFi hotspot equipment, we would not have been able to fly the mission at all.

This was a great learning experience!